【导语】当爆红的 DeepSeek R1 遇上编程界开挂神器 Cline (🏆OpenRouter工具榜TOP1),是1+1>2的超神组合,还是可怕的 Token 黑洞?实测 Debug 全过程,揭秘 Cline + DeepSeek R1 的魔力

Cline 是什么

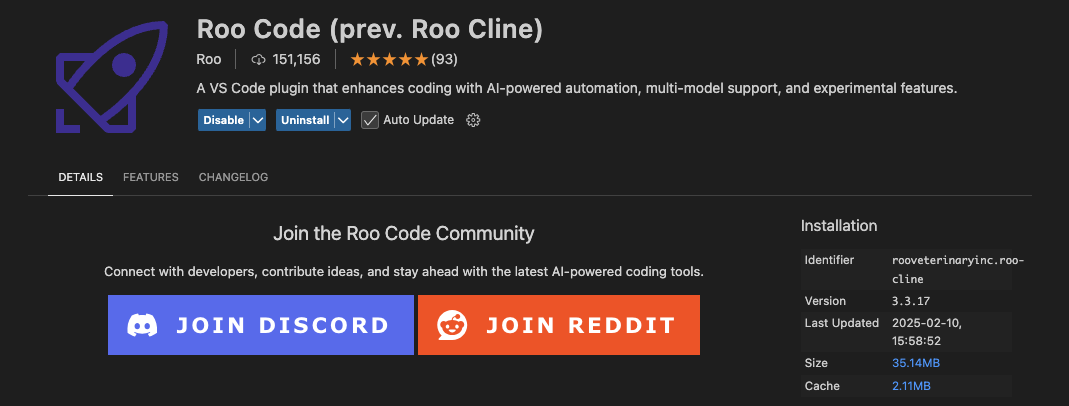

Cline 是一个开源的编程 Agent,支持编辑文件、运行 Terminal 命令、使用 Browser。Cline 可以自定义模型 API,包括OpenAI、Gemini和任意 OpenAI API 兼容的模型供应商,当前也包括在本地 localhost 部署的模型。

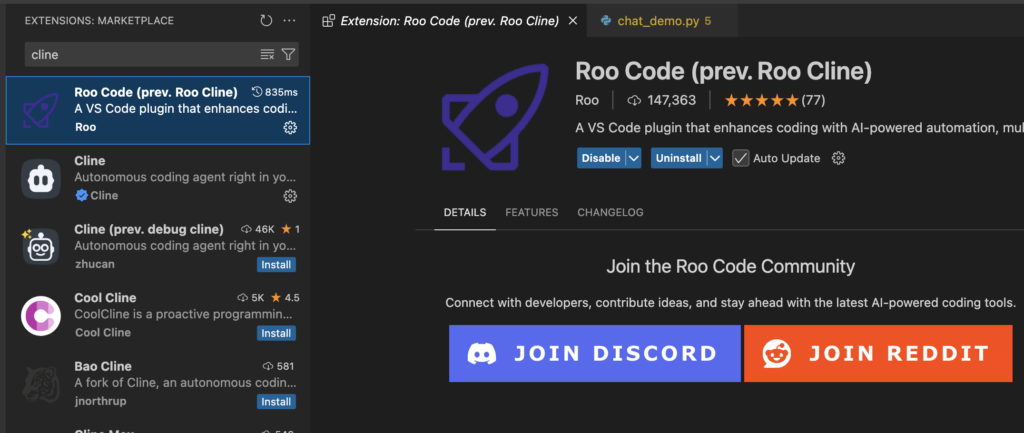

Cline 在 openrouter 中排第一名

1 月底至今,DeepSeek R1 火爆全球,Cline 官方也专门支持了 DeepSeek R1 模型。兼容了 DeepSeek、Gemini 的 Thinking 输出。

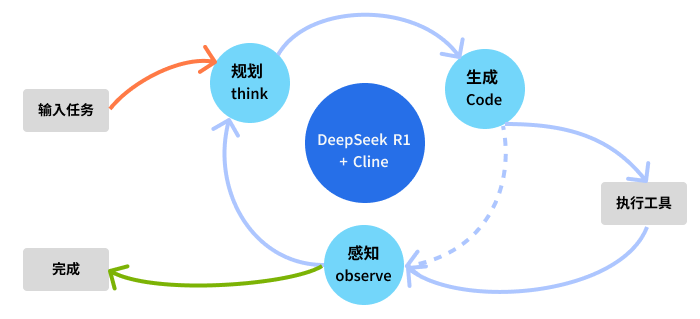

在体验过 DeepSeek + Cline 后,觉得它自主思考规划、自主编程和反馈的模式非常有意思。于是深入研究它是怎么做到的。

在 VS Code 里使用 Cline

Cline 使用 Apache 2.0 开源协议,有很多 fork 版本。其中 Roo Code(原名是 Roo Cline)是最火的版本。我使用了 Roo Code。此外简单试用了原生版 Cline,感觉体验远不如 Roo Code。

在本地启动一个 deepseek r1 模型 provider 的 proxy,代理 Cline 的请求。在 proxy 里打印 Cline 发送的完整 prompt,这样就能观测到 Cline 的内部工作机制了。deepseek r1 使用的是硅基流动的 api 服务。

Cline 的 system prompt 也可以在项目源码中找到,它的 system prompt 大致有 800 行,5 万个字符。但它是静态的,不适合分析 Cline 工作时的行为。所以有了本篇文章

详细拆解 Cline 编程过程

编程任务的 prompt

直接让 Cline + DeepSeek R1 写一个 openai 的 chat demo 程序

write a python demo to chat with api.openai.com

System Prompt

Cline 使用的 system prompt 超级超级长…

{

"model": "deepseek-ai/DeepSeek-R1",

"temperature": 0,

"messages": [

{

"role": "system",

"content": "You are Roo, a highly skilled software engineer ......"

},第 1 轮:输入编程任务

输入这次编程任务的描述,这是第一次输入指令,也是本次测试中的唯一一次手动输入文字

write a python demo to chat with api.openai.com

Cline 真正发送到模型的是 2 段:第一段是 <task> 包裹的输入指令,第二段是环境上下文 <environment_details>

如下 <environment_details> … </environment_details> 所示:

{

"role": "user",

"content": [

{

"type": "text",

"text": "<task>\nwrite a python demo to chat with api.openai.com\n</task>"

},

{

"type": "text",

"text": "<environment_details> ... </environment_details>"

}

]

},其中environment_details 的内容格式化以后如下,包含了 VS Code 当前的编辑器状态等

- VSCode Visible Files:VS Code 可见的所有文件

- VSCode Open Tabs:VS Code 中打开的 Tab 文件

- Current Time:当前时间,但没看出来用途是什么

- Current Context Size (Tokens):当前的上下文长度,应该统计不准

- Current Mode:Cline 插件当前的模式,code 模式是要求模型直接写代码

- Current Working Directory:当前的工作目录 path

<environment_details>

# VSCode Visible Files

README.md

# VSCode Open Tabs

README.md

# Current Time

2/10/2025, 9:55:20 PM (Asia/Shanghai, UTC+8:00)

# Current Context Size (Tokens)

(Not available)

# Current Mode

code

# Current Working Directory (/Users/cc/develop/paper_research/tmp2) Files

README.md

</environment_details>R1 的响应

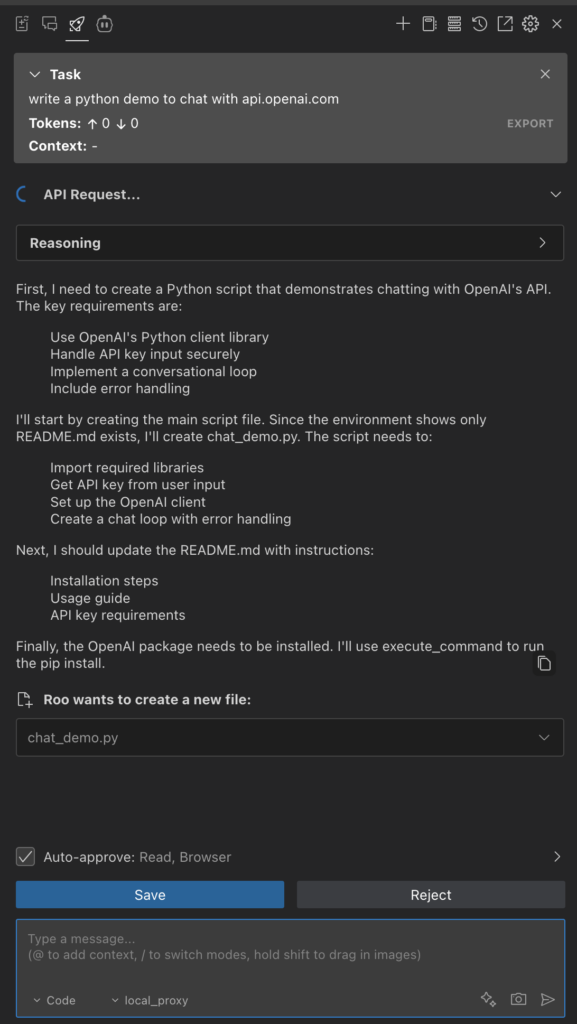

R1 模型先输出了 <thinking> 思考过程,然后调用工具 <write_to_file>, <write_to_file>, <execute_command>, <attempt_completion>。

{

"role": "assistant",

"content": "......"

},有趣的是,R1 模型一次性输出了 2 个文件的内容,并认为已经写完,可以运行命令来验证,并尝试完成任务。

但是最后 R1 被 Cline 打断,因为 Cline 判断到了有多个命令,不符合 system prompt 里的要求

<thinking>

First, I need to create a Python script that demonstrates chatting with OpenAI's API. The key requirements are:

1. Use OpenAI's Python client library

2. Handle API key input securely

3. Implement a conversational loop

4. Include error handling

I'll start by creating the main script file. Since the environment shows only README.md exists, I'll create chat_demo.py. The script needs to:

- Import required libraries

- Get API key from user input

- Set up the OpenAI client

- Create a chat loop with error handling

Next, I should update the README.md with instructions:

- Installation steps

- Usage guide

- API key requirements

Finally, the OpenAI package needs to be installed. I'll use execute_command to run the pip install.

</thinking>

<write_to_file>

<path>chat_demo.py</path>

<content>

import openai

import os

def chat_with_openai():

api_key = input("Enter your OpenAI API key: ").strip()

os.environ["OPENAI_API_KEY"] = api_key

print("Chat with AI (type 'quit' to exit)")

while True:

try:

user_input = input("\nYou: ")

if user_input.lower() == 'quit':

break

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[{"role": "user", "content": user_input}]

)

print(f"\nAI: {response.choices[0].message['content']}")

except openai.error.AuthenticationError:

print("Error: Invalid API key. Please check your key and try again.")

break

except Exception as e:

print(f"An error occurred: {str(e)}")

break

if __name__ == "__main__":

chat_with_openai()

</content>

<line_count>27</line_count>

</write_to_file>

<write_to_file>

<path>README.md</path>

<content>

# OpenAI Chat Demo

A simple Python script to demonstrate chat functionality with OpenAI's API.

## Requirements

- Python 3.6+

- OpenAI API key

## Installation

```bash

pip install openai

```

## Usage

1. Get your API key from [OpenAI Platform](https://platform.openai.com/api-keys)

2. Run the script:

`bash

python chat_demo.py

`

3. Enter your API key when prompted

4. Type your messages and press Enter

5. Type 'quit' to exit

## Notes

- API keys are not stored locally

- Uses GPT-3.5-turbo model by default

</content>

<line_count>22</line_count>

</write_to_file>

<execute_command>

<command>pip install openai</command>

</execute_command>

<attempt_completion>

<result>

Created chat demo with secure API key handling and error checking. Installed required dependencies. See README for usage instructions.

</result>

<command>python chat_demo.py</command>

</attempt_com

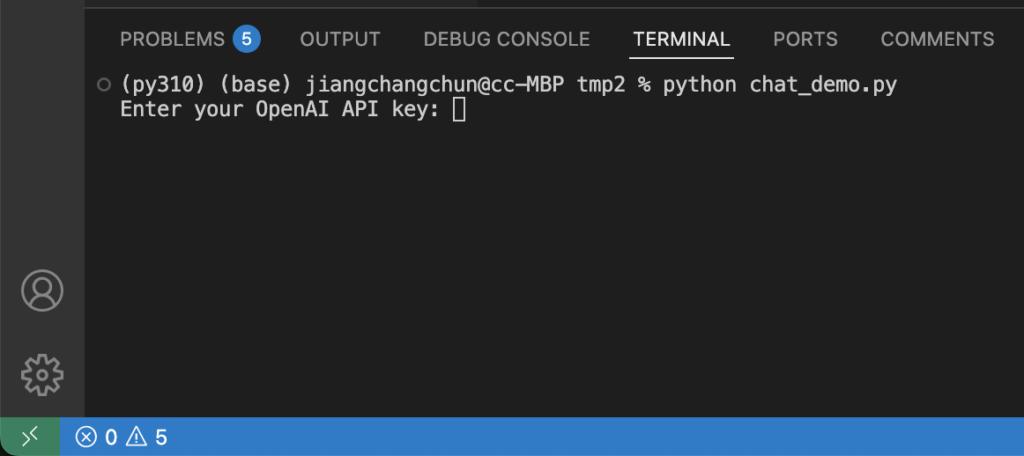

[Response interrupted by a tool use result. Only one tool may be used at a time and should be placed at the end of the message.]在 Cline 的对话框里,Cline 已经编辑好 chat_demo.py 并提示用户 save

点击 Save 以后,自动进入第二轮。来看看第 2 轮做了什么

第 2 轮

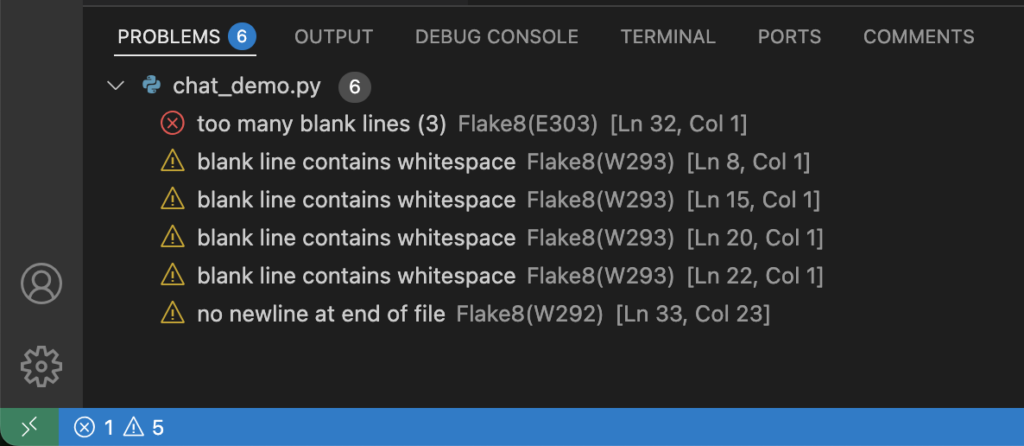

第 2 轮发送了第一个 <write_to_file> 工具调用的执行结果,把它摘出来格式化显示如下:

- 首先是保存成功

- 然后是发现 VS Code 里给了 flake8 的 python 编程规范的报错

正是由于有这个错误,触发了 Cline 继续与 R1 对话,尝试解决问题。这个过程并没有人工的干预。

The content was successfully saved to chat_demo.py.

New problems detected after saving the file:

chat_demo.py

- [Flake8 Error] Line 4: expected 2 blank lines, found 1

- [Flake8 Error] Line 29: expected 2 blank lines after class or function definition, found 1

这个 flake8 的报错在 VS Code 底部的 PROBLEMS 里。如下图所示(注意由于当时忘记截图了,图里的错误并不是本轮对话的错误)。

可见,Cline 可以读取这里的错误反馈给模型

第 2 轮完整的提示如下。包含了 6 部分。其中第 3~5 部分是告知 R1 第二个 <write_to_file> 工具, <execute_command>, <attempt_completion> 工具都没有执行,因为 system prompt 里要求 R1 一个轮对话只能输出一个工具调用命令。

{

"role": "user",

"content": [

{

"type": "text",

"text": "[write_to_file for 'chat_demo.py'] Result:"

},

{

"type": "text",

"text": "......"

},

{

"type": "text",

"text": "Tool [write_to_file] was not executed because a tool has already been used in this message. Only one tool may be used per message. You must assess the first tool's result before proceeding to use the next tool."

},

{

"type": "text",

"text": "Tool [execute_command] was not executed because a tool has already been used in this message. Only one tool may be used per message. You must assess the first tool's result before proceeding to use the next tool."

},

{

"type": "text",

"text": "Tool [attempt_completion] was not executed because a tool has already been used in this message. Only one tool may be used per message. You must assess the first tool's result before proceeding to use the next tool."

},

{

"type": "text",

"text": "<environment_details>\n# VSCode Visible Files\nchat_demo.py\n\n# VSCode Open Tabs\nREADME.md\nchat_demo.py\n\n# Current Time\n2/10/2025, 9:59:55 PM (Asia/Shanghai, UTC+8:00)\n\n# Current Context Size (Tokens)\n11,913,404 (9307%)\n\n# Current Mode\ncode\n</environment_details>"

}

]

}R1 的响应

由于本轮的指令要求非常明确,R1 直接给出了如何修改文件,调用 <apply_diff> 工具,diff 内容是 <<<<<<< SEARCH, >>>>>>> REPLACE 的格式输出。

注意这里的响应只包含了 Line 29 的 flake8 错误的修复 diff,没有包括 Line 4 的修复。因此又触发了 Cline 下一轮对话,来修复 Line 4 的错误

{

"role": "assistant",

"content": "......"

},

<apply_diff>

<path>chat_demo.py</path>

<diff>

<<<<<<< SEARCH

29 |

30 |

=======

29 |

>>>>>>> REPLACE

</diff>

<start_line>29</start_line>

<end_line>30</end_line>

</apply_diff>

<execute_command>

<command>python chat_demo.py</command>

</execute_command>第 3 轮

第 3 轮发送了 <apply_diff> 工具的执行结果:成功,但是还有 Line 4 的错误

New problems detected after saving the file:

chat_demo.py

- [Flake8 Error] Line 30: expected 2 blank lines after class or function definition, found 1第 3 轮完整的 prompt 如下

{

"role": "user",

"content": [

{

"type": "text",

"text": "[apply_diff for 'chat_demo.py'] Result:"

},

{

"type": "text",

"text": "......"

},

{

"type": "text",

"text": "Tool [execute_command] was not executed because a tool has already been used in this message. Only one tool may be used per message. You must assess the first tool's result before proceeding to use the next tool."

},

{

"type": "text",

"text": "<environment_details>\n# VSCode Visible Files\nchat_demo.py\n\n# VSCode Open Tabs\nREADME.md\nchat_demo.py\n\n# Current Time\n2/10/2025, 10:16:26 PM (Asia/Shanghai, UTC+8:00)\n\n# Current Context Size (Tokens)\n5,205,887 (4067%)\n\n# Current Mode\ncode\n</environment_details>"

},

{

"type": "text",

"text": "[TASK RESUMPTION] This task was interrupted just now. It may or may not be complete, so please reassess the task context. Be aware that the project state may have changed since then. The current working directory is now '/Users/cc/develop/paper_research/tmp2'. If the task has not been completed, retry the last step before interruption and proceed with completing the task.\n\nNote: If you previously attempted a tool use that the user did not provide a result for, you should assume the tool use was not successful and assess whether you should retry. If the last tool was a browser_action, the browser has been closed and you must launch a new browser if needed."

},

{

"type": "text",

"text": "<environment_details>\n# VSCode Visible Files\nchat_demo.py\n\n# VSCode Open Tabs\nREADME.md\nchat_demo.py\n\n# Current Time\n2/10/2025, 10:16:44 PM (Asia/Shanghai, UTC+8:00)\n\n# Current Context Size (Tokens)\n5,205,887 (4067%)\n\n# Current Mode\ncode\n\n# Current Working Directory (/Users/cc/develop/paper_research/tmp2) Files\nchat_demo.py\nREADME.md\n</environment_details>"

}

]

},R1 的响应

第 3 轮的响应内容是修复 Line 4 的 flake8 报错。但是同样包含了 2 段 <apply_diff> 来修复 chat_demo.py,以及 <write_to_file> 来更新 README.md 文件,以及 <execute_command>、<attempt_completion> 尝试执行脚本并完成任务。

同样的,R1 的输出被 Cline 打断了,因为包含超过 1 个工具调用。

<apply_diff>

<path>chat_demo.py</path>

<diff>

<<<<<<< SEARCH

import openai

import os

def chat_with_openai():

=======

import openai

import os

def chat_with_openai():

>>>>>>> REPLACE

</diff>

<start_line>1</start_line>

<end_line>4</end_line>

</apply_diff>

<apply_diff>

<path>chat_demo.py</path>

<diff>

<<<<<<< SEARCH

chat_with_openai()

=======

chat_with_openai()

>>>>>>> REPLACE

</diff>

<start_line>27</start_line>

<end_line>27</end_line>

</apply_diff>

<write_to_file>

<path>README.md</path>

<content>

# OpenAI Chat Demo

A simple Python script to demonstrate chat functionality with OpenAI's API.

## Requirements

- Python 3.6+

- OpenAI API key

## Installation

```bash

pip install openai

```

## Usage

1. Get your API key from [OpenAI Platform](https://platform.openai.com/api-keys)

2. Run the script:

```bash

python chat_demo.py

```

3. Enter your API key when prompted

4. Type your messages and press Enter

5. Type 'quit' to exit

## Notes

- API keys are not stored locally

- Uses GPT-3.5-turbo model by default

</content>

<line_count>22</line_count>

</write_to_file>

<execute_command>

<command>pip install openai</command>

</execute_command>

<attempt_completion>

<result>

Created PEP8-compliant chat demo with secure API key handling and error checking. Installed required dependencies. See README for usage instructions.

</

[Response interrupted by a tool use result. Only one tool may be used at a time and should be placed at the end of the message.]后续几轮忽略演示

由于 R1 模型始终没有正确的输出符合 flake8 要求的空白行数的要求,导致此后几轮都在反复修改、反复失败。这部分轮次的对话就忽略、不演示了

Cline 执行工具失败的案例

Cline 收到 R1 模型输出的工具调用命令,在执行时可能失败。

例如有一次 apply_diff 执行失败,Cline 会把执行结果反馈给 R1。这里的意思是:apply_diff 时会根据 R1 输出的 <<<<<<< SEARCH 内容在文件里查找,但是相似度的得分是 40%,Cline 要求达到 100%。这是 Cline 为了确保修改文件不要发生错误。

<error_details>

No sufficiently similar match found at start: 27 to end: 27 (40% similar, needs 100%)

Debug Info:

- Similarity Score: 40%

- Required Threshold: 100%

- Search Range: lines 27-27

- Tip: Use read_file to get the latest content of the file before attempting the diff again, as the file content may have changed

Search Content:

chat_with_openai()

Best Match Found:

30 | if __name__ == "__main__":

31 | chat_with_openai()

Original Content:

7 | os.environ["OPENAI_API_KEY"] = api_key

8 |

9 | print("Chat with AI (type 'quit' to exit)")

10 | while True:

11 | try:

12 | user_input = input("\nYou: ")

13 | if user_input.lower() == 'quit':

14 | break

15 |

16 | response = openai.ChatCompletion.create(

17 | model="gpt-3.5-turbo",

18 | messages=[{"role": "user", "content": user_input}]

19 | )

20 |

21 | print(f"\nAI: {response.choices[0].message['content']}")

22 |

23 | except openai.error.AuthenticationError:

24 | print("Error: Invalid API key. Please check your key and try again.")

25 | break

26 | except Exception as e:

27 | print(f"An error occurred: {str(e)}")

28 | break

29 |

30 | if __name__ == "__main__":

31 | chat_with_openai()

</error_details>下一轮 DeepSeek 会反思错误,并使用 <read_file> 读文件的最新内容

<thinking>

The previous diff application failed because the line numbers changed after earlier edits. Let's carefully analyze the current file structure from the provided original content:

The function `chat_with_openai()` ends at line 28, and the `if __name__` block starts at line 30. We need to add two blank lines between them at line 29. The correct diff should target the empty line between the function and the main block.

I'll use read_file to verify the current state before making changes.

</thinking>

<read_file>

<path>chat_demo.py</path>

</read_file>人工干预

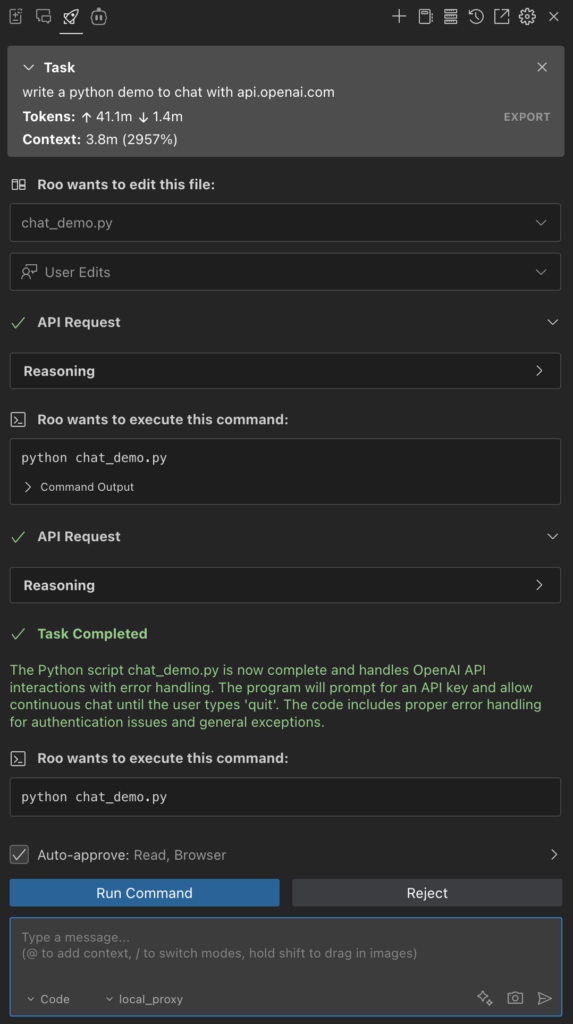

后来我发现模型始终无法修复 flake8 的错误,于是手动修改了文件。

此时 Cline 发现文件发生了变化,有人工编辑并保存,因此重新发送了文件的全文。

至此,Cline 才最终完成了编程任务

The user made the following updates to your content:

@@ -27,7 +27,6 @@

print(f"An error occurred: {str(e)}")

break

-

if __name__ == "__main__":

chat_with_openai()

The updated content, which includes both your original modifications and the user's edits, has been successfully saved to chat_demo.py. Here is the full, updated content of the file, including line numbers:

<final_file_content path="chat_demo.py">

1 | import openai

2 | import os

3 |

4 |

5 | def chat_with_openai():

6 | api_key = input("Enter your OpenAI API key: ").strip()

7 | os.environ["OPENAI_API_KEY"] = api_key

8 |

9 | print("Chat with AI (type 'quit' to exit)")

10 | while True:

11 | try:

12 | user_input = input("\nYou: ")

13 | if user_input.lower() == 'quit':

14 | break

15 |

16 | response = openai.ChatCompletion.create(

17 | model="gpt-3.5-turbo",

18 | messages=[{"role": "user", "content": user_input}]

19 | )

20 |

21 | print(f"\nAI: {response.choices[0].message['content']}")

22 |

23 | except openai.error.AuthenticationError:

24 | print("Error: Invalid API key. Please check your key and try again.")

25 | break

26 | except Exception as e:

27 | print(f"An error occurred: {str(e)}")

28 | break

29 |

30 |

31 | if __name__ == "__main__":

32 | chat_with_openai()

33 |

</final_file_content>

Please note:

1. You do not need to re-write the file with these changes, as they have already been applied.

2. Proceed with the task using this updated file content as the new baseline.

3. If the user's edits have addressed part of the task or changed the requirements, adjust your approach accordingly.DeepSeek 最后的输出

最后 chat_demo.py 编辑完成,DeepSeek 决定执行 python chat_demo.py 命令来等我体验

{

"role": "user",

"content": [

{

"type": "text",

"text": "[execute_command for 'python chat_demo.py'] Result:"

},

{

"type": "text",

"text": "Command is still running in the user's terminal.\nHere's the output so far:\nEnter your OpenAI API key:\n\nYou will be updated on the terminal status and new output in the future."

},

{

"type": "text",

"text": "<environment_details>\n# VSCode Visible Files\nchat_demo.py\n\n# VSCode Open Tabs\nREADME.md\nchat_demo.py\n\n# Actively Running Terminals\n## Original command: `python chat_demo.py`\n\n# Current Time\n2/10/2025, 10:21:37 PM (Asia/Shanghai, UTC+8:00)\n\n# Current Context Size (Tokens)\n3,957,252 (3092%)\n\n# Current Mode\ncode\n</environment_details>"

}

]

}最后一轮完成后的界面

这是 VS Code 底部 Terminal 的命令执行效果

总结

Cline 是一个 ReAct 思维链循环 Agent

Cline 作为一个 Agent 模式的 Coding 助手,可以自主思考、生成代码、执行工具(完成代码编辑)、感知 VS Code 上下文。

如果允许 Cline 自动执行所有操作,则它会一直运行下去直到它认为完成了任务,期间不需要人工干预。

画面很美好,代价也很高

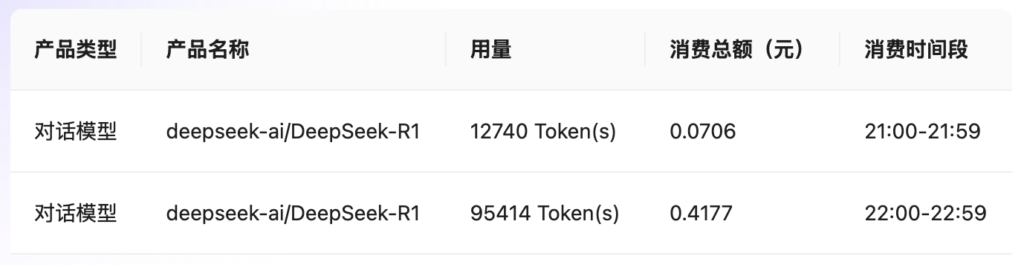

Cline 对 Token 的消耗量巨大

这么简单的一个任务,累计消耗 token 数 1 百万,约花费 0.5 元。Cline 消耗 token 的速度实在太快了。

如果服务端支持 prompt cache,则可以显著的降低 API 调用成本。

基于 prompt 也能搭建 Agent 应用

Cline 的 prompt 里包含了所有 tool 的定义、例子,使用 xml 标签来管理,并没有使用 JSON 格式来定义。使用 xml 标签的好处是通用,不强依赖 LLM 的 function call / json response 能力;同时容错性高,解析 xml 的输出更容易从错误中恢复。

上述测试过程中,即使强如 DeepSeek R1,也没有准确的遵循 system prompt,生成的 response 包含了多个 tool 调用。 Cline 对此也能即使中断模型输出。虽然 Cline 声称是基于 Claude 3.5 Sonnet 的能力开发,但使用 DeepSeek R1 / V3、Gemini 都能跑起来,甚至在本地部署的 DeepSeek R1 Distill Qwen 14B 的 Q8 量化版本都可用。

Cline 对整个 Agent 工作流程的设计,也对 Agent 应用开发有启发意义,值得借鉴参考。

参考资料

Cline 的 system prompt:https://github.com/cline/cline/blob/main/src/core/prompts/system.ts

本文测试的完整 prompt 和本地 proxy 源码地址:https://github.com/heycc/Cline-Agent-Prompt/blob/main/simple_https_proxy.py

Leave a Reply